Incomplete Local Training

With this type of system heterogeneity, clients may be unable to finish all of their local training epochs, which can cause objective inconsistency and degrade model performance.
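To see why unequal local work can shift the objective, consider the toy sketch below. It is not FLGo code: the one-dimensional quadratic losses, the function names, and the step counts are all assumptions made for illustration. Two clients hold losses with minimizers 0 and 1, so the equally weighted global minimizer is 0.5, but the second client runs ten local steps per round while the first runs only one. Plain averaging is compared with a step-normalized average, a simplified version of the idea behind FedNova for plain gradient descent.

```python
import numpy as np

def local_gd(x, center, steps, lr):
    """Run `steps` gradient steps on f(x) = 0.5 * (x - center) ** 2."""
    for _ in range(steps):
        x = x - lr * (x - center)
    return x

def plain_average(x, local, steps):
    # FedAvg-style aggregation: average the local models directly.
    return float(local.mean())

def normalized_average(x, local, steps):
    # Divide each client's total update by its number of local steps,
    # average the per-step updates, then rescale by the mean step count.
    per_step_update = (x - local) / steps
    tau_eff = steps.mean()
    return float(x - tau_eff * per_step_update.mean())

def run(aggregate, centers, steps, lr=0.01, rounds=1000):
    """Simulate `rounds` communication rounds starting from x = 0."""
    steps = np.asarray(steps)
    x = 0.0
    for _ in range(rounds):
        local = np.array([local_gd(x, c, t, lr) for c, t in zip(centers, steps)])
        x = aggregate(x, local, steps)
    return x

centers = [0.0, 1.0]   # hypothetical per-client minimizers
steps = [1, 10]        # hypothetical local steps actually completed
x_plain = run(plain_average, centers, steps)
x_norm = run(normalized_average, centers, steps)
print(f"plain averaging: {x_plain:.3f}, normalized averaging: {x_norm:.3f}")
```

In this toy setting, plain averaging settles at roughly 0.9, pulled toward the client that performs more steps, while the normalized average stays close to 0.5.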

To model this type of heterogeneity, we use the variable working_amount to control the number of local training iterations each client actually performs.

The values of this variable can be set arbitrarily through the API Simulator.set_variable(client_ids: List[Any], 'working_amount', values).
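As a minimal sketch of how such values might be produced, the snippet below scales each client's planned number of local steps by a random completion ratio. It does not depend on FLGo: the helper name sample_working_amounts, the client ids, and the planned step counts are hypothetical, and the clipped normal distribution is only one possible choice.

```python
import numpy as np

def sample_working_amounts(planned_steps, mean=1.0, std=1.0, low=0.01, high=2.0, seed=0):
    """Hypothetical helper: map client id -> number of steps actually run."""
    rng = np.random.default_rng(seed)
    # One completion ratio per client, clipped to [low, high].
    ratios = rng.normal(mean, std, len(planned_steps)).clip(low, high)
    # Truncate to an integer and make sure every client runs at least one step.
    return {
        cid: max(int(ratio * n_steps), 1)
        for (cid, n_steps), ratio in zip(planned_steps.items(), ratios)
    }

# Hypothetical client ids mapped to their planned local steps.
planned = {0: 50, 1: 50, 2: 120}
amounts = sample_working_amounts(planned)
print(amounts)
```

The resulting list of values, ordered to match client_ids, is what would be passed as the values argument of set_variable.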

Example

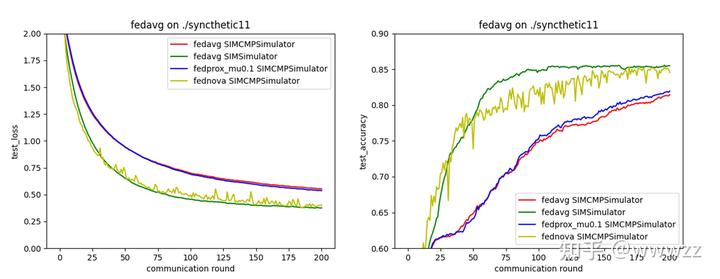

The following example demonstrates this feature on the Synthetic dataset, comparing the baselines FedAvg, FedProx, and FedNova.

    import os

    import numpy as np

    import flgo
    import flgo.algorithm.fedavg as fedavg
    import flgo.algorithm.fedprox as fedprox
    import flgo.algorithm.fednova as fednova
    import flgo.experiment.analyzer
    import flgo.experiment.logger as fel
    import flgo.simulator.base
    import flgo.benchmark.synthetic_regression as synthetic

    # 1. Construct the Simulator
    class CMPSimulator(flgo.simulator.base.BasicSimulator):
        def update_client_completeness(self, client_ids):
            # Sample each client's working amount once, on the first call,
            # and reuse the same values in every later round.
            if not hasattr(self, '_my_working_amount'):
                # Completion ratio drawn from N(1, 1) and clipped to [0.01, 2]
                rs = np.random.normal(1.0, 1.0, len(self.clients))
                rs = rs.clip(0.01, 2)
                # Scale each client's planned steps by its ratio (at least 1 step)
                self._my_working_amount = {
                    cid: max(int(r * self.clients[cid].num_steps), 1)
                    for cid, r in zip(self.clients, rs)
                }
                print(self._my_working_amount)
            working_amount = [self._my_working_amount[cid] for cid in client_ids]
            self.set_variable(client_ids, 'working_amount', working_amount)

    # 2. Create federated task Synthetic(1,1)
    task = './syncthetic11'
    gen_config = {'benchmark': {'name': synthetic, 'para': {'alpha': 1., 'beta': 1., 'num_clients': 30}}}
    if not os.path.exists(task):
        flgo.gen_task(gen_config, task_path=task)

    if __name__ == '__main__':
        # 3. Sequentially run different runners to eliminate the impact of randomness
        option = {'gpu': [0,], 'proportion': 1.0, 'log_file': True, 'num_epochs': 5,
                  'learning_rate': 0.02, 'batch_size': 20, 'num_rounds': 200,
                  'sample': 'full', 'aggregate': 'uniform'}
        # FedAvg without the custom simulator serves as the ideal baseline
        runner_fedavg_ideal = flgo.init(task, fedavg, option, Logger=fel.BasicLogger)
        runner_fedavg_ideal.run()
        # The remaining runners all use CMPSimulator
        runner_fedavg_hete = flgo.init(task, fedavg, option, Simulator=CMPSimulator, Logger=fel.BasicLogger)
        runner_fedavg_hete.run()
        runner_fedprox_hete = flgo.init(task, fedprox, option, Simulator=CMPSimulator, Logger=fel.BasicLogger)
        runner_fedprox_hete.run()
        runner_fednova_hete = flgo.init(task, fednova, option, Simulator=CMPSimulator, Logger=fel.BasicLogger)
        runner_fednova_hete.run()

        # 4. Visualize results
        analysis_plan = {
            'Selector': {'task': task, 'header': ['fedavg', 'fedprox', 'fednova'], 'legend_with': ['SIM']},
            'Painter': {
                'Curve': [
                    {
                        'args': {'x': 'communication_round', 'y': 'test_loss'},
                        'obj_option': {'color': ['r', 'g', 'b', 'y', 'skyblue']},
                        'fig_option': {'xlabel': 'communication round', 'ylabel': 'test_loss',
                                       'title': 'fedavg on {}'.format(task), 'ylim': [0, 2]}
                    },
                    {
                        'args': {'x': 'communication_round', 'y': 'test_accuracy'},
                        'obj_option': {'color': ['r', 'g', 'b', 'y', 'skyblue']},
                        'fig_option': {'xlabel': 'communication round', 'ylabel': 'test_accuracy',
                                       'title': 'fedavg on {}'.format(task), 'ylim': [0.6, 0.9]}
                    },
                ]
            },
            'Table': {
                'min_value': [
                    {'x': 'train_loss'},
                    {'x': 'test_loss'}
                ],
                'max_value': [
                    {'x': 'test_accuracy'}
                ]
            },
        }
        flgo.experiment.analyzer.show(analysis_plan)

The results show that FedNova achieves similar performance under severe data heterogeneity and outperforms the other methods, which is consistent with the results reported in the FedNova paper.