Varying Client Responsiveness

In FL, communication costs and local training costs can vary across clients. From the server's point of view, only the total time cost of each client is observable, measured from the moment the server sends the global model to that client. We therefore define client responsiveness as the time interval between the server sending the global model to a client and the server receiving that client's local results. One important consequence of varying client responsiveness is that a synchronous server must wait for the slowest selected client in each communication round, which results in large time costs.
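The cost of waiting for the slowest client can be illustrated with a minimal sketch in plain Python. It does not use FLGo; the latency values, the number of clients, and the helper name `synchronous_round_time` are assumptions made only for illustration.

```python
import random


def synchronous_round_time(latencies):
    """Time of one synchronous round: the server waits for the slowest reply."""
    return max(latencies)


rng = random.Random(0)

# Hypothetical responsiveness of 100 clients, in virtual time units.
latency = {cid: rng.randint(5, 999) for cid in range(100)}

# The server selects 10 clients and waits until all of them have replied.
selected = rng.sample(sorted(latency), 10)
round_time = synchronous_round_time([latency[c] for c in selected])
mean_time = sum(latency[c] for c in selected) / len(selected)

print(f"round time = {round_time}, mean client latency = {mean_time:.1f}")
```

The round time equals the maximum latency among the selected clients, so it can never be lower than their mean latency; a single slow client is enough to delay the whole round.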

In FLGo, we model the responsiveness of all clients with the variable latency in our Simulator.

To update the responsiveness of the selected clients, change this variable by calling

self.set_variable(client_ids:List[Any], var_name:str='latency', latency_values:list[int])

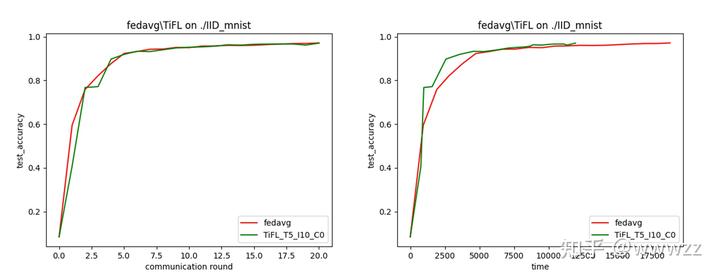

We now show how to set heterogeneity in client responsiveness with the following example. To compare against FedAvg, we adopt a time-efficient method, TiFL, to show the impact of this heterogeneity.
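The intuition behind tier-based selection can be sketched in plain Python before turning to the FLGo example. This is a simplified illustration under stated assumptions, not FLGo's TiFL implementation: the helpers `build_tiers` and `total_time` are hypothetical, the latencies are made up, and the tier for each round is picked uniformly at random, whereas the real method chooses tiers adaptively.

```python
import random


def build_tiers(latency, num_tiers):
    """Sort clients by latency and split them into up to num_tiers groups."""
    ordered = sorted(latency, key=latency.get)
    size = -(-len(ordered) // num_tiers)  # ceiling division
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def total_time(latency, num_rounds, per_round, tiers=None, seed=0):
    """Sum of synchronous round times; each round lasts as long as its slowest client."""
    rng = random.Random(seed)
    total = 0
    for _ in range(num_rounds):
        # With tiers, all clients of a round come from one tier (assumption:
        # the tier is chosen uniformly at random); otherwise from all clients.
        pool = rng.choice(tiers) if tiers else list(latency)
        chosen = rng.sample(pool, min(per_round, len(pool)))
        total += max(latency[c] for c in chosen)
    return total


rng = random.Random(0)
# Hypothetical responsiveness of 100 clients, in virtual time units.
latency = {cid: rng.randint(5, 999) for cid in range(100)}
tiers = build_tiers(latency, num_tiers=5)

uniform = total_time(latency, num_rounds=20, per_round=10)
tiered = total_time(latency, num_rounds=20, per_round=10, tiers=tiers)
print(f"uniform sampling: {uniform}, tier-based sampling: {tiered}")
```

Because clients in the same tier have similar latency, a round drawn from a fast tier is not held back by a slow client, which is why tier-based selection tends to lower the total time in this kind of simulation.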

Example

import os
import numpy as np
import flgo.simulator.base

# 1. let each client's response time follow a discrete uniform distribution over [5, 1000)
class RSSimulator(flgo.simulator.base.BasicSimulator):
    def initialize(self):
        # draw a static response time for each client once, at initialization
        self.client_time_response = {cid: np.random.randint(5, 1000) for cid in self.clients}
        self.set_variable(list(self.clients.keys()), 'latency', list(self.client_time_response.values()))

    def update_client_responsiveness(self, client_ids):
        # reuse the static response times of the selected clients
        latency = [self.client_time_response[cid] for cid in client_ids]
        self.set_variable(client_ids, 'latency', latency)

# 2. generate federated task
import flgo.benchmark.mnist_classification as mnist
import flgo.benchmark.partition as fbp

task = './IID_mnist'
gen_config = {
    'benchmark': mnist,
    'partitioner': fbp.IIDPartitioner
}
if not os.path.exists(task):
    flgo.gen_task(gen_config, task_path=task)

# 3. Customize Logger to record time cost
import flgo.experiment.logger as fel

class MyLogger(fel.BasicLogger):
    def log_once(self, *args, **kwargs):
        self.info('Current_time:{}'.format(self.clock.current_time))
        super(MyLogger, self).log_once()
        self.output['time'].append(self.clock.current_time)

if __name__ == '__main__':
    # 4. run fedavg and TiFL for comparison
    import flgo.algorithm.fedavg as fedavg
    import flgo.algorithm.TiFL as TiFL
    runner_fedavg = flgo.init(task, fedavg, {'gpu':[0,],'log_file':True, 'num_epochs':1, 'num_rounds':20}, Logger=MyLogger, Simulator=RSSimulator)
    runner_fedavg.run()
    runner_tifl = flgo.init(task, TiFL, {'gpu':[0,],'log_file':True, 'num_epochs':1, 'num_rounds':20}, Logger=MyLogger, Simulator=RSSimulator)
    runner_tifl.run()

    # 5. visualize the results
    import flgo.experiment.analyzer
    analysis_plan = {
        'Selector': {'task': task, 'header': ['fedavg', 'TiFL']},
        'Painter': {
            'Curve': [
                {
                    # test accuracy against communication rounds
                    'args': {'x': 'communication_round', 'y': 'test_accuracy'},
                    'obj_option': {'color': ['r', 'g', 'b', 'y', 'skyblue']},
                    'fig_option': {'xlabel': 'communication round', 'ylabel': 'test_accuracy',
                                   'title': 'fedavg\\TiFL on {}'.format(task)}
                },
                {
                    # test accuracy against the virtual time recorded by MyLogger
                    'args': {'x': 'time', 'y': 'test_accuracy'},
                    'obj_option': {'color': ['r', 'g', 'b', 'y', 'skyblue']},
                    'fig_option': {'xlabel': 'time', 'ylabel': 'test_accuracy',
                                   'title': 'fedavg\\TiFL on {}'.format(task)}
                },
            ]
        },
        'Table': {
            'max_value': [
                {'x': 'test_accuracy'},
                {'x': 'time'},
            ]
        },
    }
    flgo.experiment.analyzer.show(analysis_plan)

The results suggest that TiFL saves nearly 40% of the time cost while achieving similar performance over the same number of communication rounds.
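A relative time saving of this kind can be computed from the final values of the 'time' column recorded by the logger. The sketch below shows only the arithmetic; the helper `relative_time_saving` is hypothetical and the two totals are placeholder numbers, not measurements from this experiment.

```python
def relative_time_saving(baseline_time, method_time):
    """Fraction of the baseline time saved by the compared method."""
    if baseline_time <= 0:
        raise ValueError("baseline_time must be positive")
    return 1.0 - method_time / baseline_time


# Placeholder totals for illustration only; these are NOT measured results.
baseline_total = 200.0
method_total = 150.0

saving = relative_time_saving(baseline_total, method_total)
print(f"relative time saving: {saving:.0%}")
```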