Index

Algorithm Integration

We have already implemented 10+ SOTA algorithms published at top-tier conferences and journals in recent years.

| Method | Reference | Publication | Tag |
|---|---|---|---|
| FedAvg | [McMahan et al., 2017] | AISTATS 2017 | |
| FedAsync | [Cong Xie et al., 2019] | | Asynchronous |
| FedBuff | [John Nguyen et al., 2022] | AISTATS 2022 | Asynchronous |
| TiFL | [Zheng Chai et al., 2020] | HPDC 2020 | Communication-efficiency, responsiveness |
| AFL | [Mehryar Mohri et al., 2019] | ICML 2019 | Fairness |
| FedFv | [Zheng Wang et al., 2021] | IJCAI 2021 | Fairness |
| FedMgda+ | [Zeou Hu et al., 2022] | IEEE TNSE 2022 | Fairness, robustness |
| FedProx | [Tian Li et al., 2020] | MLSys 2020 | Non-I.I.D., incomplete training |
| Mifa | [Xinran Gu et al., 2021] | NeurIPS 2021 | Client availability |
| PowerofChoice | [Yae Jee Cho et al., 2020] | arXiv | Biased sampling, fast convergence |
| QFedAvg | [Tian Li et al., 2020] | ICLR 2020 | Communication-efficient, fairness |
| Scaffold | [Sai Praneeth Karimireddy et al., 2020] | ICML 2020 | Non-I.I.D., communication capacity |

Benchmark Gallery

| Benchmark | Type | Scene | Task |
|---|---|---|---|
| CIFAR100 | image | horizontal | classification |
| CIFAR10 | image | horizontal | classification |
| CiteSeer | graph | horizontal | classification |
| Cora | graph | horizontal | classification |
| PubMed | graph | horizontal | classification |
| MNIST | image | horizontal | classification |
| EMNIST | image | horizontal | classification |
| FEMNIST | image | horizontal | classification |
| FashionMNIST | image | horizontal | classification |
| ENZYMES | graph | horizontal | classification |
| | text | horizontal | classification |
| Sentiment140 | text | horizontal | classification |
| MUTAG | graph | horizontal | classification |
| Shakespeare | text | horizontal | classification |
| Synthetic | table | horizontal | classification |

Async/Sync Supported

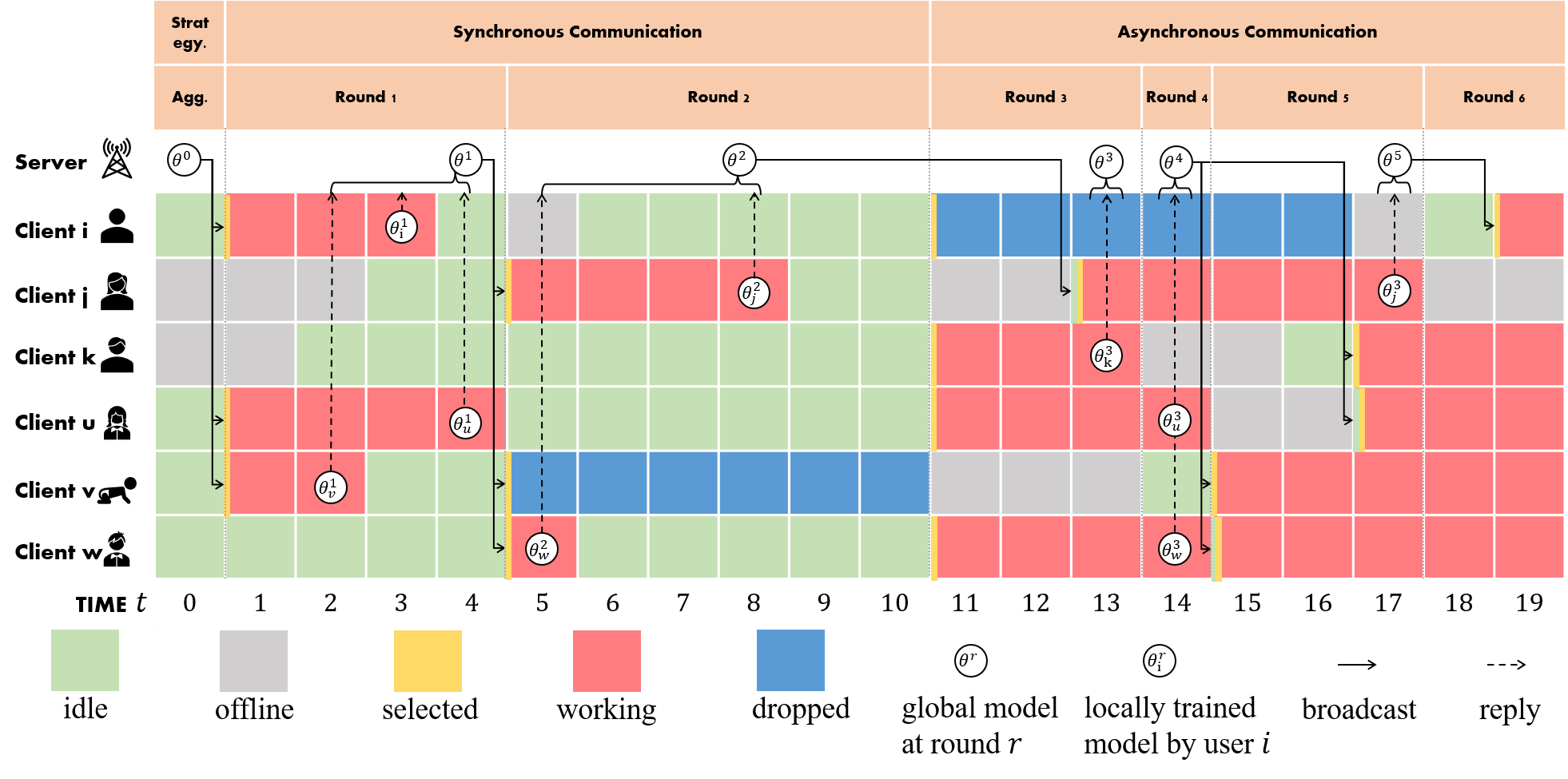

We use a virtual global clock and a client-state machine to simulate real-world scenarios, so that asynchronous and synchronous strategies can be compared. The example below illustrates the difference between the two strategies in FLGo.

For synchronous algorithms, the server waits for the slowest client in each round. In round 1, the server selects a subset of idle clients (i.e., clients i, u, and v) to join training, and the slowest client, v, determines the duration of the round (i.e., four time units). If any selected client suffers a training failure (i.e., drops out), the round lasts for the maximum time the server is willing to wait (e.g., round 2 takes the maximum waiting time of six units while waiting for a response from client v).
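The synchronous timing rule can be sketched in a few lines of Python. This is only an illustration of the rule described above, not FLGo's implementation: `ClientRun` and `sync_round_duration` are hypothetical names, the response times of clients i and u are assumed values, and so is the set of clients selected in round 2. Only client v's four units and the six-unit maximum wait come from the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClientRun:
    """One selected client in a round (hypothetical helper type)."""
    name: str
    # Virtual time units needed to respond; None means the client dropped out.
    response_time: Optional[int]


def sync_round_duration(selected: List[ClientRun], max_wait: int) -> int:
    """Return the duration of one synchronous round on the virtual clock."""
    if any(c.response_time is None for c in selected):
        # A dropped client never answers, so the server waits the full timeout.
        return max_wait
    # Otherwise the slowest responder decides, capped at the timeout.
    slowest = max((c.response_time for c in selected), default=0)
    return min(slowest, max_wait)


# Round 1: all clients respond, client v is the slowest (four units).
round1 = [ClientRun("i", 2), ClientRun("u", 3), ClientRun("v", 4)]
# Round 2: client v drops out, so the round lasts the maximum waiting time.
round2 = [ClientRun("i", 2), ClientRun("v", None)]

print(sync_round_duration(round1, max_wait=6))  # 4
print(sync_round_duration(round2, max_wait=6))  # 6
```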

For asynchronous algorithms, the server usually samples idle clients periodically to update the model; the period is set to two time units in our example. After sampling the currently idle clients, the server immediately checks whether any packages have been returned by clients (e.g., the server selects client j and receives the package from client k at time 13).
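The asynchronous loop can be sketched as a small simulation on a virtual clock. This is a simplified illustration rather than FLGo's actual scheduler: `simulate_async`, its parameters, and the client latencies are hypothetical, and a client is assumed to count as idle only after its previous package has been collected.

```python
import random
from typing import Dict, List, Tuple


def simulate_async(latency: Dict[str, int], period: int, horizon: int,
                   per_tick: int = 1, seed: int = 0
                   ) -> List[Tuple[int, List[str], List[str]]]:
    """Return (time, selected clients, received packages) for each server tick."""
    rng = random.Random(seed)
    arrival: Dict[str, int] = {}  # client -> virtual time its package arrives
    log = []
    for now in range(0, horizon + 1, period):
        # 1) Sample among clients that have no outstanding package.
        idle = sorted(c for c in latency if c not in arrival)
        selected = sorted(rng.sample(idle, min(per_tick, len(idle))))
        for c in selected:
            arrival[c] = now + latency[c]
        # 2) Immediately collect every package that has arrived by now.
        received = sorted(c for c, t in arrival.items() if t <= now)
        for c in received:
            del arrival[c]
        log.append((now, selected, received))
    return log


# Assumed latencies: client j needs 3 time units, client k needs 5.
for tick in simulate_async({"j": 3, "k": 5}, period=2, horizon=8, per_tick=2):
    print(tick)
```

With these assumed latencies, the tick at time 6 selects client j again while receiving the package from client k, which mirrors the situation described in the example above.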

Experimental Tools

For experimental purposes

Automatic Tuning

Multi-Scene (Horizontal and Vertical)

Accelerating by Multi-Process

References

[Cong Xie et al., 2019] Cong Xie, Sanmi Koyejo, Indranil Gupta. Asynchronous Federated Optimization.

[John Nguyen et al., 2022] John Nguyen, Kshitiz Malik, Hongyuan Zhan, Ashkan Yousefpour, Michael Rabbat, Mani Malek, Dzmitry Huba. Federated Learning with Buffered Asynchronous Aggregation. In International Conference on Artificial Intelligence and Statistics (AISTATS), 2022.

[Mehryar Mohri et al., 2019] Mehryar Mohri, Gary Sivek, Ananda Theertha Suresh. Agnostic Federated Learning. In International Conference on Machine Learning (ICML), 2019.

[Zheng Wang et al., 2021] Zheng Wang, Xiaoliang Fan, Jianzhong Qi, Chenglu Wen, Cheng Wang, Rongshan Yu. Federated Learning with Fair Averaging. In International Joint Conference on Artificial Intelligence (IJCAI), 2021.

[Zeou Hu et al., 2022] Zeou Hu, Kiarash Shaloudegi, Guojun Zhang, Yaoliang Yu. Federated Learning Meets Multi-objective Optimization. In IEEE Transactions on Network Science and Engineering (TNSE), 2022.

[Tian Li et al., 2020] Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar, Virginia Smith. Federated Optimization in Heterogeneous Networks. In Conference on Machine Learning and Systems (MLSys), 2020.

[Xinran Gu et al., 2021] Xinran Gu, Kaixuan Huang, Jingzhao Zhang, Longbo Huang. Fast Federated Learning in the Presence of Arbitrary Device Unavailability. In Neural Information Processing Systems (NeurIPS), 2021.

[Yae Jee Cho et al., 2020] Yae Jee Cho, Jianyu Wang, Gauri Joshi. Client Selection in Federated Learning: Convergence Analysis and Power-of-Choice Selection Strategies.

[Tian Li et al., 2020] Tian Li, Maziar Sanjabi, Ahmad Beirami, Virginia Smith. Fair Resource Allocation in Federated Learning. In International Conference on Learning Representations (ICLR), 2020.